What role does visual prediction play in guiding eye and hand movements?

An emerging theme in our research is the key role of prediction in both perception and action. For example, when pursuing moving targets, humans often combine smooth pursuit movements with frequent interleaved saccades that reposition the target near the fovea. In that context, it is perhaps not surprising that we found predictive post-saccadic following responses (PFR) in a simple saccade task to stationary motion apertures (Fig. 2C). PFR might reflect the automaticity of the prediction necessary to coordinate saccades and pursuit for moving stimuli. Indeed, previous studies of pursuit found a post-saccadic enhancement for target motion in which eye velocity matched the target velocity at saccade landing in a predictive way, much like PFR. Natural pursuit thus provides an excellent framework for studying prediction as well as the interaction between peripheral and foveal vision.

Ultimately, our laboratory aims to study increasingly active visual behaviors, including, in the long term, unrestrained visually guided reaching movements. We expect such tasks to exhibit even stronger evidence of prediction. We have recently developed an unrestrained reach-to-grasp task that involves predictive reaching to acquire moving insects. As with eye movements in visual pursuit, reaching for dynamic prey requires active prediction of the future target position to compensate for visuo-motor processing delays. Previous research on reaching in non-human primates has relied on chair-restrained ballistic movements either to stationary targets or, when studying online adjustments of movement, to targets that suddenly step during the reach. Those studies find that online adjustments involve a visuo-motor delay of 200-300 ms. But those approaches also impose constraints that limit the natural dynamics of reaching.
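
To make the need for prediction concrete, the following is a minimal sketch of first-order extrapolation, one simple way a delay could be compensated. It is an illustration only, not a claim about the underlying neural computation. The symbols $x(t)$ (target position), $v(t)$ (target velocity), and $\tau$ (visuo-motor delay) are introduced here, and the 20 cm/s target speed is a hypothetical value chosen purely for the arithmetic.

```latex
\hat{x}(t+\tau) = x(t) + v(t)\,\tau
\qquad
\Delta x = v\,\tau:\quad
0.25\,\mathrm{s}\times 20\,\mathrm{cm/s} = 5\,\mathrm{cm},\quad
0.10\,\mathrm{s}\times 20\,\mathrm{cm/s} = 2\,\mathrm{cm}
```

Under these assumed numbers, a hand aimed at where the target was seen would miss by several centimeters unless the movement is directed at the extrapolated position, and the size of the required correction scales with the delay.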

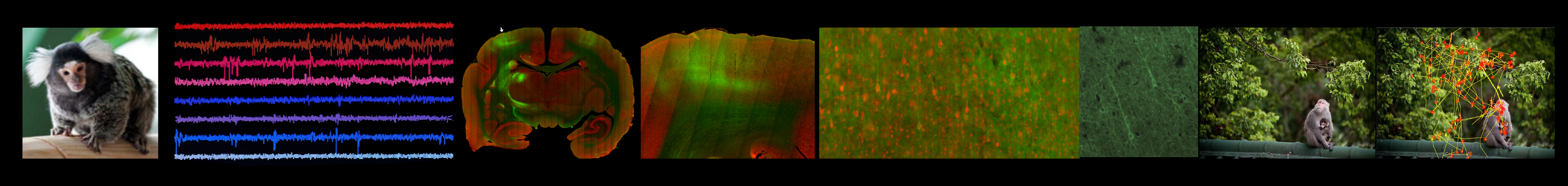

Figure 5. Marker-free tracking of reach-to-grasps for dynamic prey. A: Multiple high-speed cameras track unrestrained reaching for live crickets in a video arena. B: Triangulation from multiple views recovers 3D kinematics of reaching.

To examine natural reach-to-grasp dynamics, we have developed an ecologically motivated reach-to-grasp task with live crickets (Fig. 5A). We use multiple high-speed video cameras to capture the unrestrained movements and apply machine vision algorithms to the video to reconstruct 3D kinematics (Fig. 5B). Contrary to the estimates from traditional paradigms, we find that online adjustments operate at very short visuo-motor delays, rivaling the speeds typical of oculomotor pursuit. Multiple linear regression modeling of the kinematic relationship between hand and cricket velocity revealed a visuo-motor delay below 100 ms (Shaw et al., bioRxiv). In future research, we also aim to test the role of cortico-cortical feedback projections from frontal to parietal areas in mediating predictions for fast online control. Another goal of this project is to develop head-mounted eye tracking to monitor binocular eye position and examine how eye movements guide predictive reaching.
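
As a rough illustration of how a visuo-motor delay can be read out from a lagged multiple linear regression, the sketch below regresses hand velocity on delayed copies of target velocity and takes the lag with the largest weight as the delay estimate. It is a sketch under stated assumptions, not the analysis reported in Shaw et al.: the data are simulated, and the function names (`lagged_design`, `estimate_delay`), the 200 Hz sampling rate, the 80 ms ground-truth delay, the gain, and the noise level are all hypothetical choices made for the example.

```python
import numpy as np


def lagged_design(target_v, max_lag):
    """Build a design matrix whose column k holds target_v delayed by k samples."""
    n = len(target_v)
    cols = [target_v[max_lag - k : n - k] for k in range(max_lag + 1)]
    return np.column_stack(cols)


def estimate_delay(hand_v, target_v, dt, max_lag):
    """Fit hand_v[t] = sum_k w[k] * target_v[t - k] + b by least squares.

    Returns the lag (in seconds) with the largest absolute weight,
    together with the full vector of lag weights.
    """
    X = lagged_design(target_v, max_lag)
    X = np.column_stack([X, np.ones(len(X))])  # intercept term
    y = hand_v[max_lag:]                       # align with rows of X
    weights, *_ = np.linalg.lstsq(X, y, rcond=None)
    lag_weights = weights[:-1]
    best_lag = int(np.argmax(np.abs(lag_weights)))
    return best_lag * dt, lag_weights


# --- Simulated data (all values below are assumptions for illustration) ---
rng = np.random.default_rng(0)
dt = 0.005        # 200 Hz sampling
n = 4000          # 20 s of data
true_lag = 16     # 16 samples * 5 ms = 80 ms ground-truth delay

# Target velocity: a temporally correlated AR(1) process.
drive = rng.standard_normal(n)
target_v = np.zeros(n)
for t in range(1, n):
    target_v[t] = 0.8 * target_v[t - 1] + drive[t]

# Hand velocity: scaled, delayed copy of target velocity plus noise.
hand_v = np.zeros(n)
hand_v[true_lag:] = 0.9 * target_v[:-true_lag]
hand_v += 0.1 * rng.standard_normal(n)

delay, lag_weights = estimate_delay(hand_v, target_v, dt, max_lag=40)
print(f"estimated visuo-motor delay: {delay * 1000:.0f} ms")
```

Fitting all lags jointly, rather than computing a cross-correlation at each lag separately, helps separate the true delay from the spread introduced by the target's own temporal autocorrelation. With real kinematics, the weights are typically distributed over several lags, so a peak or centroid of the weight profile would need to be interpreted with more care than in this idealized simulation.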

As a final area of future research interest, we have recently begun a collaboration with Dora Biro’s laboratory at the UR that aims to extend the tracking technologies developed for unrestrained reaching into the domain of social interactions (UR Research Award, 2022). We are able to track multiple marmosets in a larger space as they interact during group foraging to capture live crickets. This research is in its early stages, but it opens opportunities to examine how eye movements are used in social monitoring and cooperative group behavior.
