How does marmoset visual behavior compare to humans?

To fully benefit from the neuroscientific advantages of studying vision in marmosets, we need reliable behavioral paradigms in this animal model. The first behavioral study in my laboratory highlights why it is critical to establish reliable behavior before neural investigation. Using a Gabor detection task to estimate visual acuity, we discovered that marmosets were unable to see fine details on the displays used for vision experiments because nearly all of them were near-sighted (Nummela et al, 2017, Dev. Neuro.). We corrected this impairment in distance vision with a lens (i.e., marmoset glasses). Without this correction it would have been impossible, for example, to study visual receptive field structure accurately.
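To illustrate the kind of stimulus a Gabor detection task uses, the sketch below computes a Gabor patch: a sinusoidal grating under a Gaussian envelope. It also checks the display's Nyquist limit, because a display cannot render gratings finer than half its pixels-per-degree. The function names `gabor_value`, `gabor_patch`, and `max_displayable_sf` and all of their parameters are hypothetical. This is a minimal sketch, not the stimulus code from Nummela et al. (2017).

```python
import math


def max_displayable_sf(px_per_deg):
    """Nyquist limit: highest spatial frequency (cycles/deg) a display with
    px_per_deg pixels per degree can represent."""
    return px_per_deg / 2.0


def gabor_value(x_deg, y_deg, sf_cpd, sigma_deg, theta_deg=0.0, phase=0.0, contrast=1.0):
    """Contrast-scaled Gabor modulation at (x_deg, y_deg), in [-contrast, contrast]."""
    theta = math.radians(theta_deg)
    # Position along the axis (angle theta_deg) over which the carrier varies.
    u = x_deg * math.cos(theta) + y_deg * math.sin(theta)
    # Isotropic Gaussian envelope centered on the patch.
    envelope = math.exp(-(x_deg ** 2 + y_deg ** 2) / (2.0 * sigma_deg ** 2))
    carrier = math.cos(2.0 * math.pi * sf_cpd * u + phase)
    return contrast * envelope * carrier


def gabor_patch(size_px, px_per_deg, sf_cpd, sigma_deg, **kwargs):
    """Sample a size_px x size_px Gabor centered in the image; returns rows of floats."""
    if sf_cpd >= max_displayable_sf(px_per_deg):
        # Reaching or exceeding Nyquist would alias the grating on screen.
        raise ValueError("spatial frequency reaches the display's Nyquist limit")
    half = (size_px - 1) / 2.0
    return [
        [gabor_value((c - half) / px_per_deg, (half - r) / px_per_deg,
                     sf_cpd, sigma_deg, **kwargs)
         for c in range(size_px)]
        for r in range(size_px)
    ]
```

An acuity measurement could step `sf_cpd` upward at fixed contrast until detection falls to threshold. That procedure is an assumption here. The source states only that a Gabor detection task was used.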

In recent studies we have also examined behavioral measures of motion processing in marmosets and humans. We focused on motion processing because much is known about its behavioral and neural basis, and because motion stimuli afford precise control of signal reliability. We have established two behavioral paradigms: one that provides analog perceptual reports of motion direction (Cloherty et al, 2020, Cerebral Cortex), and a second that uses automatic read-outs from smooth eye movements (Kwon et al, 2019, JOV; Kwon et al., 2022, Elife). These paradigms are critical to the work presented in the subsequent sections.
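One common way to control the reliability of a motion signal is motion coherence in a random-dot stimulus. On each frame, a fraction of dots equal to the coherence steps in the signal direction, and the rest are noise. The sketch below assumes a "random position" noise rule, where noise dots are replotted anywhere in a circular aperture. This is one of several rules in the literature and is not necessarily the one used in the cited studies. The functions `step_dots` and `random_point_in_disk` are hypothetical.

```python
import math
import random


def random_point_in_disk(radius, rng):
    """Uniformly distributed random point inside a circle of the given radius."""
    r = radius * math.sqrt(rng.random())  # sqrt keeps density uniform over area
    a = 2.0 * math.pi * rng.random()
    return (r * math.cos(a), r * math.sin(a))


def step_dots(dots, coherence, direction_deg, step, aperture_radius, rng):
    """Advance a random-dot motion stimulus by one frame.

    A fraction `coherence` of dots (chosen afresh each frame) moves `step`
    units in `direction_deg`; the remaining noise dots are replotted at random
    positions. Signal dots that leave the aperture are also replotted.
    Returns (new_dots, set_of_signal_dot_indices).
    """
    if not 0.0 <= coherence <= 1.0:
        raise ValueError("coherence must lie in [0, 1]")
    n_signal = round(coherence * len(dots))
    signal = set(rng.sample(range(len(dots)), n_signal))
    dx = step * math.cos(math.radians(direction_deg))
    dy = step * math.sin(math.radians(direction_deg))
    new_dots = []
    for i, (x, y) in enumerate(dots):
        if i in signal:
            x, y = x + dx, y + dy
            if math.hypot(x, y) > aperture_radius:
                x, y = random_point_in_disk(aperture_radius, rng)
        else:
            x, y = random_point_in_disk(aperture_radius, rng)
        new_dots.append((x, y))
    return new_dots, signal
```

A coherence of 0 gives pure noise and a coherence of 1 gives fully coherent motion, so intermediate values grade how reliable the direction signal is.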

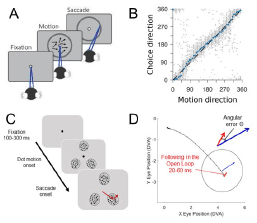

Figure 2. Behavioral measures of motion processing in marmoset monkeys. A: An analog motion estimation task in which marmosets view a foveal motion stimulus and report its direction by making a saccade to a peripheral ring. B: Saccade choices accurately reflect the true motion direction. C: A foraging task in which marmosets make a saccade to one of three peripheral motion apertures, one of which lies within the potential receptive fields of the neurons under study. D: Post-saccadic smooth eye movements (red trace) track the stimulus motion direction (blue arrow) in the selected peripheral aperture.

The first approach used a motion estimation task with analog reports to probe perception (Cloherty et al, 2020, Cerebral Cortex). Marmosets viewed random-dot motion stimuli at fixation and then reported the perceived direction by making a saccade to the point on a peripheral ring toward which the motion was directed (Fig. 2A). After training, their choices aligned accurately with the stimulus direction (Fig. 2B). By varying signal strength (motion coherence), we also found speed-accuracy trade-offs similar to those previously reported in macaques and humans.
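Analog reports are continuous angles rather than binary choices, so accuracy is naturally summarized as circular error. The sketch below wraps the difference between the reported saccade direction and the true motion direction into [-180, 180) degrees, then averages the absolute error within each coherence level. The function names and the tuple layout of `trials` are hypothetical. This is not the analysis pipeline of Cloherty et al. (2020).

```python
from collections import defaultdict


def angular_error(reported_deg, true_deg):
    """Signed circular difference (reported - true), wrapped to [-180, 180)."""
    return (reported_deg - true_deg + 180.0) % 360.0 - 180.0


def mean_abs_error_by_coherence(trials):
    """trials: iterable of (coherence, reported_deg, true_deg) tuples.

    Returns {coherence: mean absolute angular error in degrees}.
    """
    errors = defaultdict(list)
    for coherence, reported, true in trials:
        errors[coherence].append(abs(angular_error(reported, true)))
    return {c: sum(e) / len(e) for c, e in sorted(errors.items())}
```

Wrapping matters because a report of 350 degrees for a 10-degree stimulus is a 20-degree error, not a 340-degree one.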

A second approach to obtaining a behavioral read-out of motion exploits post-saccadic following responses (PFR). We discovered PFR in humans while studying pre-saccadic attention (Kwon et al, 2019, JOV). PFR is an automatic eye movement that follows the motion of the saccade target. We measure PFR in a saccade foraging task. Marmosets fixate centrally, and then three motion apertures appear in the periphery, one of which they select with a saccade (Fig. 2C). Reward is given for selecting a different aperture than on the previous trial, to encourage foraging. After the saccade, we find a following response to target motion in an early open-loop period (20-60 ms). This following response has a low gain relative to the actual target velocity (red compared to blue vector) but reliably indicates motion direction (Fig. 2D). Further, the gain for the target, compared with that for the other apertures, provides a behavioral index of pre-saccadic attention (Coop et al, PFR, bioRxiv). We have used this task to test neural mechanisms of pre-saccadic attention (discussed in the following section). In subsequent work with humans, we found that the PFR can be dissociated from perceptual reports (Kwon et al, 2022, Elife), much like other smooth eye movements. This suggests that independent pathways may be used to read out motion information for action and perception. Because we have established these behavioral measures, we are able to study and manipulate the different neural pathways involved.
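One way to quantify PFR gain is to project post-saccadic eye velocity onto the target's motion direction, average it over the 20-60 ms open-loop window, and divide by target speed. The sketch below makes several assumptions. Velocity samples are taken at a fixed rate (1 kHz by default) with sample 0 aligned to saccade offset. The attention-like index is the target gain minus the mean gain computed along the non-selected apertures' motion directions. The names `pfr_gain` and `attention_index` and this index definition are hypothetical and are not the index defined by Coop et al.

```python
import math


def pfr_gain(eye_vel, motion_dir_deg, speed, sample_rate_hz=1000.0,
             window_ms=(20.0, 60.0)):
    """Mean eye velocity along motion_dir_deg in the open-loop window, divided by speed.

    eye_vel: list of (vx, vy) in deg/s, with sample 0 at saccade offset (assumed).
    Returns a unitless gain; 1.0 would mean the eye matched the stimulus velocity.
    """
    ux = math.cos(math.radians(motion_dir_deg))
    uy = math.sin(math.radians(motion_dir_deg))
    start = int(round(window_ms[0] * sample_rate_hz / 1000.0))
    stop = int(round(window_ms[1] * sample_rate_hz / 1000.0))
    window = eye_vel[start:stop]
    if not window:
        raise ValueError("no samples in the open-loop window")
    # Project each velocity sample onto the unit vector of the motion direction.
    projected = [vx * ux + vy * uy for vx, vy in window]
    return (sum(projected) / len(projected)) / speed


def attention_index(target_gain, other_gains):
    """Hypothetical index: target gain minus mean gain toward non-selected apertures."""
    if not other_gains:
        raise ValueError("need at least one non-selected aperture")
    return target_gain - sum(other_gains) / len(other_gains)
```

In practice, each gain would be computed from the same eye-velocity trace, once per aperture's motion direction. A positive index then means the eye followed the selected target's motion more than the other apertures' motion.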
